“What the human eye observes casually and incuriously, the eye of the camera notes with relentless fidelity.” – Berenice Abbott

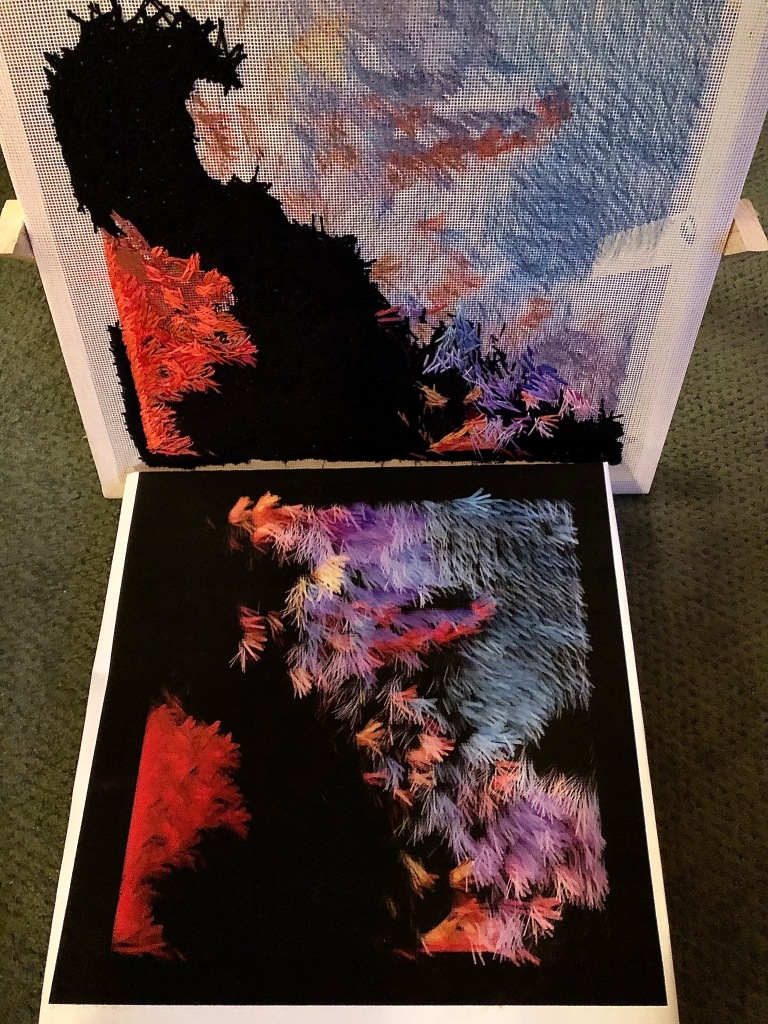

Lately I’ve been reading about the nature of light, specifically the light in (or is it of?) pixels. This is part of a larger project I’ve embarked on with David Young. (See Negotiating the Image with Pixels and Thread.) David produces exquisitely elaborated digital images that he co-creates with his custom-designed AI/machine learning programs. To date, our work has been built around his manipulated images of my darning (a form of woven mending), which I’ve been translating back into an analog state by stitching the computer-generated images onto canvas.

I’m not interested in bringing the darn full circle, i.e., from cloth to printed image to stitched image. Rather than trying to produce a faithful replica, I’m interested in the process as a translation. I’m producing an analog version of what the AI does with the images it is fed. Both the computer and I produce distortions. I do it with thread and the computer does it with pixels. But is this a child’s game of telephone or a double transubstantiation?

Precisely because I understand so little about the computer’s ‘translation’ of my crosshatched threads, I’ve become especially curious about the nature of the pixels I’m stitching. Given that the same digital image can morph from fuzzy to spiky, from dense to sparse, when it’s manipulated on the screen, I wonder if a pixel behaves like a musical note, which can be extended, dampened with a pedal, or sharpened by a quick touch. Is there latent information in pixels that accounts for the variability in their appearance from one shift of a button to the next?

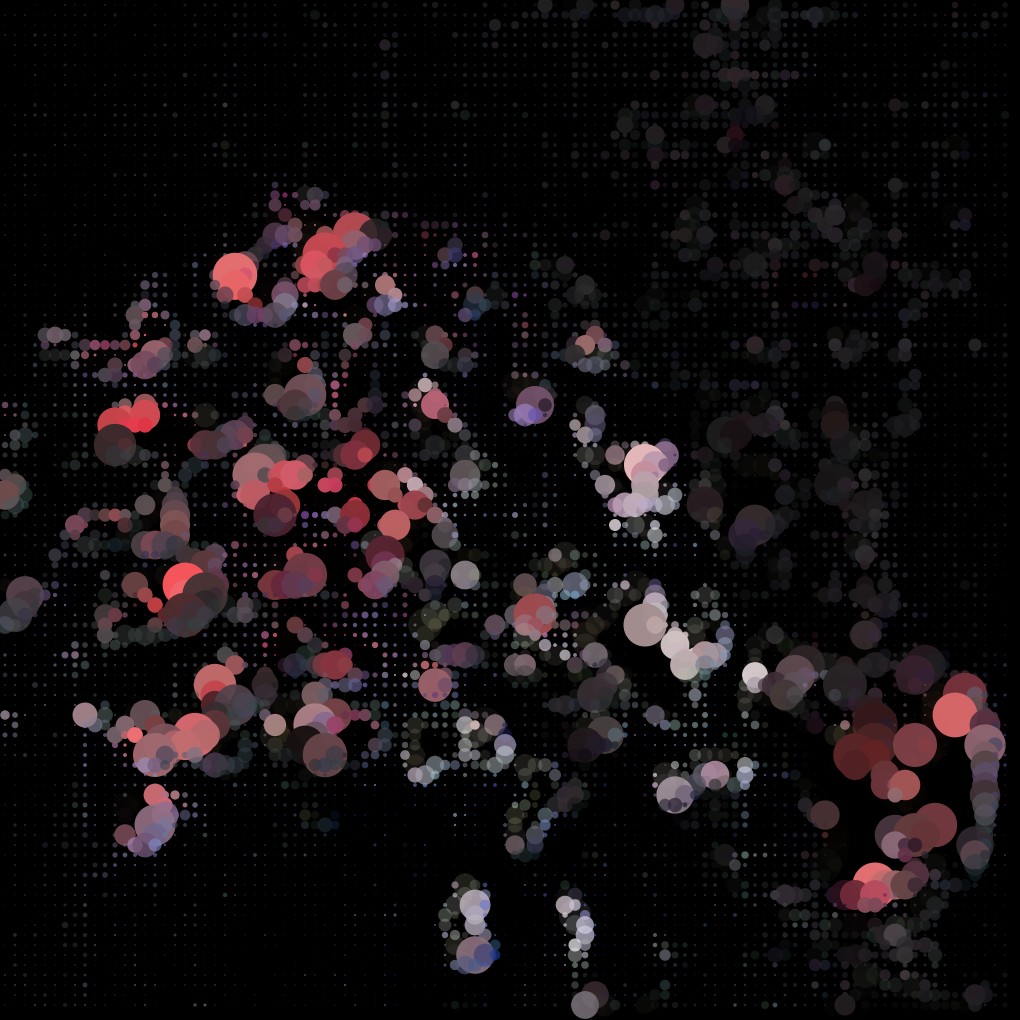

Digital images of a darn

I’ve tried reading Alvy Ray Smith’s A Biography of the Pixel and am still trying. I’ve also bumbled around on Internet sites. They’re like foreign language phrase books, good for discrete definitions but no help with integrating them into a sensible conversation.

Finally, I called on my friend Hugh Dubberly for help. Hugh is a design luminary in the digital realm and an excellent (a.k.a., supremely patient) educator, and I hit the jackpot when he said yes. I won’t rehearse his lesson (conducted largely over a two-and-a-half-hour Zoom), except to share the most relevant nuggets of the processes involved in producing digital color photographs. (Apologies to Hugh, in advance, for the quality of my translation; and apologies to readers who find this tedious.)

- Natural light (in my case, bouncing off my stitching) enters the camera lens.

- That analog light is interpreted by sensors.

- Three sets of sensors are needed for color images to be constructed and seen.

- Each set has a different color filter (a thin piece of plastic): red, green, or blue.

- Clusters of these RGB sensors are arranged in a grid. In other words, there are many sets of sensors behind the camera’s lens.

- Each sensor measures the brightness value of the light it receives and translates it to a digital (a.k.a., numerical) value.

- That information is stored in the camera/computer.

- When the user (I hate that term but it will have to suffice) retrieves that image (brings it up on their screen), they have a choice about how to display the stored pixels (a.k.a., stored ratios of filtered light intensity).

- That choice is exercised by changing (or not) the numerical values recorded by the filtered sensors.

- What we see is a translation of numerical data to images made of light on our screens.

- Screens are made up of display elements.

- A display element can hold different numbers of pixels.[1]

- Changing the ratios between pixels and the screen’s display elements alters the image. (This is probably ‘coals to Newcastle’ for most readers but it was helpful information for me.)

- Each of a pixel’s three color channels (red, green, blue) offers up to 256 choices, or 8 bits; together the three channels make up the pixel’s bit depth.

- Together the joint RGB pixel offers 16.7 million, or 2^24 (two to the power of 24), choices of color.[2]
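For readers who think better in numbers, the arithmetic behind the last few points can be sketched in a few lines of Python. This is only an illustration under simple assumptions (8 bits per channel, three channels). The function names `color_count`, `pack_rgb`, and `brighten` are invented here and do not come from Hugh's lesson or from any particular camera or image software.

```python
# A minimal sketch, assuming 8 bits per channel and three channels (R, G, B).
# All names below are hypothetical, chosen for illustration only.

LEVELS_PER_CHANNEL = 256  # 8 bits per channel: values 0 through 255
CHANNELS = 3              # red, green, blue


def color_count(levels: int = LEVELS_PER_CHANNEL, channels: int = CHANNELS) -> int:
    """Number of distinct colors one pixel can encode."""
    return levels ** channels


def pack_rgb(r: int, g: int, b: int) -> int:
    """Pack three 8-bit channel values into a single 24-bit number."""
    for value in (r, g, b):
        if not 0 <= value <= 255:
            raise ValueError("channel values must be in the range 0..255")
    return (r << 16) | (g << 8) | b


def brighten(pixel, factor):
    """Scale each channel by `factor`, clamping results to 0..255.

    This stands in for the viewer's 'choice' to change the stored numbers
    before they are shown on screen.
    """
    return tuple(min(255, max(0, round(c * factor))) for c in pixel)


if __name__ == "__main__":
    print(color_count())                   # 16777216
    print(color_count() == 2 ** 24)        # True
    print(pack_rgb(255, 255, 255))         # 16777215
    print(brighten((100, 150, 200), 1.5))  # (150, 225, 255)
```

The stored pixel is just three small numbers. Changing those numbers changes what appears on screen, while the count of possible combinations (256 × 256 × 256) stays fixed.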

This last point was the one that answered the question I started with: ‘Is there latent information in pixels that accounts for the variability in their appearance from one shift of a button to the next?’ What I was calling ‘latent information in pixels’ was, in fact, their dimensionality, their depth. And the clue was that the number of colors a pixel can hold is calculated with exponents. I remembered enough high school math to recognize that cubing a number (in the case of a pixel, 256 × 256 × 256, which equals 2^24) describes a three-dimensional space. (I also remember enough physics to know that light has velocity, which entails time, as well as the dimensions of its waves; so light is multi-dimensional.) So my suspicions were correct (if not my reasoning): there is real depth in the image, at least on the screen. When the image is printed, I see it as an illusion but no more of an illusion than any other photograph.

I also recognize that there is an element of absurdity in this labored examination of the pixel. But in order to get a grip on what I’m doing, I find that, to paraphrase Roland Barthes’ observation in Camera Lucida, I have to work in two modes: one of calculations (available to anyone who knows where to look or who to talk to) and one of singularity to replenish those calculations “with the élan of an emotion which belongs only to myself.”[3]

Now I think I have some comprehension of the variability possible in a single image that David retrieves from his AI/ML operations. However, it doesn’t lessen my fascination with them. Understanding them as calculations of measurements of light doesn’t denature them in the slightest. For there is another factor at work that I haven’t mentioned: the human eye/brain. Specifically, mine.

It has only recently dawned on me that this on-going project that David and I call “Echo Chambers” mirrors a predilection I’ve had for as long as I can remember. Namely, the near futile effort of trying to tease out specific elements in a densely packed space.

In fact, I had a dream as a child in which lines would cross over each other against a white background. (N.B., there were no computer screens in 1955, so it was just a blank field.) The dream would turn into a nightmare when the lines got fuzzy and tangled and I couldn’t tell them apart. Then it made me anxious; now it gives me pleasure. Maybe it was a case of early optical migraines (which I still have) or maybe I was seeing floaters. Or, even more likely, it was a dream about my Etch A Sketch toy. (Though the red case, which you can see a glimpse of in the image above, wasn’t in the dream.) In any case, it seems I come by this attraction to compressed spaces honestly.

I even went through a spell of constructing those spaces in the late 1970s. I’d just moved to New York City and was struck by the patterns of the fire escapes that cross-hatched so many buildings. It is also telling that I chose to describe that appearance of compression with a textile, specifically lines of ribbon, pinned onto monofilament, which was attached to opposite walls with nails. (Not sure what my landlord made, after we moved out, of the scarification of the bedroom that had to double as a studio.)

Decades later, my attempt to derive stitched versions of pixels is yet another attempt to tease multiple layers of lines apart. Only this time I’m using thread to see into the patterns made by lines of light.

And, of course, thread has its own degrees of luminosity and spatial dimensions. Paint or resin might capture light more effectively (I’m sure it would) but those methods would involve an aqueous process. I find that constructing with colored threads better approximates the way pixels construct an image. I am not shaping the thread into the square units but I am pulling it through a mesh of square openings. The canvas is my display screen; I manipulate the ratio of stitches to the display screen, creating coarser and finer versions of the printed digital image.
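The idea of coarser and finer versions of one image has a simple digital counterpart, sketched below in Python. It assumes a tiny grayscale image stored as rows of brightness values from 0 to 255. The functions `coarsen` and `enlarge` are hypothetical names, and block averaging and pixel repetition are only one plain way of changing the ratio of pixels to display elements (or to stitches). They are not a description of what David's programs or any particular software actually do.

```python
# A minimal sketch, assuming a grayscale image stored as a list of rows,
# each row a list of brightness values (0..255). Names are hypothetical.


def coarsen(image, block):
    """Average each block-by-block square of pixels into a single value.

    Several pixels map to one display element (or one stitch): zooming out.
    """
    rows, cols = len(image), len(image[0])
    result = []
    for r in range(0, rows, block):
        out_row = []
        for c in range(0, cols, block):
            # Collect the pixels in this square, trimming at the image edge.
            cells = [
                image[i][j]
                for i in range(r, min(r + block, rows))
                for j in range(c, min(c + block, cols))
            ]
            out_row.append(round(sum(cells) / len(cells)))
        result.append(out_row)
    return result


def enlarge(image, factor):
    """Repeat each pixel across a factor-by-factor square: zooming in."""
    result = []
    for row in image:
        wide = [value for value in row for _ in range(factor)]
        result.extend(list(wide) for _ in range(factor))
    return result


if __name__ == "__main__":
    darn = [
        [0, 0, 200, 200],
        [0, 0, 200, 200],
        [100, 100, 40, 60],
        [100, 100, 60, 40],
    ]
    print(coarsen(darn, 2))      # [[0, 200], [100, 50]]
    print(enlarge([[1, 2]], 2))  # [[1, 1, 2, 2], [1, 1, 2, 2]]
```

In the coarser version, the small variations in the lower right corner (40, 60, 60, 40) collapse into a single 50, much as a coarse stitch flattens fine detail.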

Whether this has any meaning beyond the material satisfaction it affords me (and possibly others) is an open question. For the present, I prefer to think of the stitched surface as a very modest exploration of the threaded textile as mediator as well as a medium in itself.

Notes

1. Typically, one display element maps to one image pixel; however, when images are scaled on screen, several pixels might be mapped to one display element (for zooming out), or one pixel might be shown by several display elements (for zooming in).

2. That is, 256 possible Red levels x 256 Green levels x 256 Blue levels is 16.7 million possible combinations.

3. Roland Barthes. Camera Lucida: Reflections on Photography. Trans. Richard Howard. (New York: Farrar, Straus & Giroux, 1982) 76.